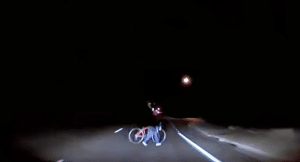

The first fatality involving a “self-driving” vehicle and a pedestrian occurred in Tempe, Arizona on March 18, 2018.

The self-driving Uber vehicle was traveling northbound on Mill Avenue near the intersection with East Curry Road/Washington Street in Tempe, Arizona. The self-driving Uber was in the right lane of two northbound through lanes. In the area of the collision, there are four (4) total lanes, two northbound through lanes and two left-turn-only lanes. The pedestrian, who was walking a bicycle at the time, was crossing from west-to-east (left-to-right) in front of the self-driving Uber.

The pedestrian was in the roadway for a distance of over 40 feet. Assuming a pedestrian walking a bicycle moves at 5 feet per second, this puts the pedestrian in the roadway for over 8 seconds. Because the self-driving Uber was traveling at 38 mph (about 56 feet per second), it was approximately 450 feet from the point of impact when the pedestrian first stepped into the roadway.
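The arithmetic behind these figures can be checked with a short sketch. It uses only the numbers quoted above and rests on two simplifying assumptions: the pedestrian walks at a steady 5 feet per second, and the vehicle holds a constant 38 mph. The function names are illustrative and are not drawn from any crash-reconstruction tool.

```python
# Back-of-the-envelope check of the figures quoted above.
# Assumptions (from the article): 5 ft/s walking speed, constant 38 mph vehicle speed.

FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def mph_to_fps(mph: float) -> float:
    """Convert miles per hour to feet per second."""
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR


def time_in_roadway(distance_ft: float, walking_speed_fps: float) -> float:
    """Seconds the pedestrian needs to cover distance_ft at a steady walk."""
    return distance_ft / walking_speed_fps


def vehicle_distance_at_entry(speed_mph: float, seconds: float) -> float:
    """Feet the vehicle covers in the given time at a constant speed."""
    return mph_to_fps(speed_mph) * seconds


seconds_exposed = time_in_roadway(40.0, 5.0)
distance_ft = vehicle_distance_at_entry(38.0, seconds_exposed)

print(f"Vehicle speed: {mph_to_fps(38.0):.1f} ft/s")
print(f"Pedestrian in roadway: {seconds_exposed:.1f} s")
print(f"Vehicle distance when pedestrian entered: {distance_ft:.0f} ft")
```

With the unrounded speed the distance comes out slightly under 450 feet, which is consistent with the rounded figure used in the text.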

Based on the video, neither the vehicle nor the human safety driver detected the pedestrian prior to impact. The point of impact was to the right front area of the vehicle, and the pedestrian had passed the centerline of the vehicle.

Video taken from onboard cameras in the self-driving Uber shows the operator did not have his eyes on the road or hands on the wheel before the crash occurred.

What Failed With the Uber Self-Driving Vehicle?

In public comments, Raj Rajkumar, who leads the AV research team at Carnegie Mellon University, said, “The car’s LiDAR should have picked the pedestrian up far before it hit her. Clearly there’s a problem, because the radar also should have picked her up throughout, she was moving.”

“This was a straightforward scenario that LiDAR should have detected,” says Ram Vasudevan, an assistant professor of mechanical engineering at the University of Michigan who has worked extensively with autonomous systems. “This was a tragedy that was avoidable.”

Uber may not be using enough sensors, or the right kind of sensors, for self-driving at night (or in the daytime, for that matter), or its algorithms or calibrations may need to be adjusted. This is analogous to airbag crash sensing for both frontal and side impacts, where automotive manufacturers tried to use too few sensors or cheap sensors, or failed to include the algorithms or calibrations necessary for good performance.

Questions abound: Did a software algorithm misinterpret the situation? Did the LiDAR fail? However, the consensus remains that there was failure at all levels, not only with the technology but also with having only one human back-up driver. (Most companies have two: one to monitor the road and one to monitor the equipment.) Here, dash cam video released after the crash shows that the one back-up driver in the vehicle appeared to be distracted and looking down in the moments before the crash.

The Handoff Problem

This crash also highlights the “handoff problem”: the flawed expectation, implicit in the relationship between car and human, that driving responsibility can be safely passed from machine to human in a split second. Studies have shown that human drivers rapidly lose their ability to focus on the road when a machine or robot is doing most of the work. It is well known that humans are terrible babysitters of automation, so much so that several manufacturers have decided to bypass the human altogether and remove the steering wheel and gas pedal from the vehicle.

Uber’s Competitors Claim Their Technology and Software Would Have Avoided This Fatality

John Krafcik, CEO of Waymo, Google’s self-driving car project, said the company has “a lot of confidence” its own self-driving vehicle technology could have detected the pedestrian who was killed by the self-driving Uber in Tempe, Arizona.

“Based on our knowledge of what we’ve seen so far, in situations like that one, we have a lot of confidence our tech would be robust and would be able to handle situations like that one,” said Krafcik.

Mobileye CEO Amnon Shashua claimed in public statements that his company’s “computer vision system would have detected the pedestrian who was killed in Arizona by a self-driving Uber vehicle, and called for a concerted move to validate the safety of autonomous vehicles.”

Volvo and its CAT supplier, Aptiv Plc, also tried to distance themselves from the Uber crash, publicly announcing that Uber had disabled Volvo’s collision avoidance system. Aptiv Plc, the maker of the radar and camera system used on the Volvo XC90, wanted to ensure that the public is not “confused” and does not “think it was a failure of the technology that we supply for Volvo, because that’s not the case,” according to Aptiv spokesman Zach Peterson.

Public Highways Are Not Proving Grounds: It Took a Fatality to Force Companies to Rethink the Approach to Testing Autonomous Vehicles

After the self-driving Uber test vehicle operating in autonomous mode struck and killed a pedestrian, manufacturers, suppliers and regulators have been forced to re-evaluate the development of self-driving technology. To make these vehicles and their testing programs safe, companies need to recognize their limits and respond accordingly.

While core autonomous vehicle technology, such as cameras, radar, LiDAR and decision-making algorithms, has become much more sophisticated, test vehicles still struggle with basic maneuvers that human drivers would easily handle. Often these situations involve predicting and responding to the actions of human-driven vehicles and pedestrians, meaning human lives are at stake.

Documents made public after the Uber self-driving fatality indicate that Uber’s autonomous vehicles had difficulty driving through construction zones and next to tall vehicles, like big rigs, and its drivers “had to intervene far more frequently than the drivers of competing autonomous car projects.” Uber was struggling just to meet its target of 13 miles per ‘intervention’ in Arizona. By comparison, Waymo says its vehicles average about 5,600 miles per intervention by the safety drivers.
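To put the two intervention figures on a common scale, the following sketch converts each to interventions per 1,000 miles and computes the ratio between them. It assumes the two numbers are directly comparable, which the reporting does not establish: the Uber figure is described as a target, and companies may define an "intervention" differently. The names below are illustrative only.

```python
# Rough comparison of the miles-per-intervention figures quoted above.
# Assumption: both figures count "interventions" the same way (not confirmed by the source).

UBER_MILES_PER_INTERVENTION = 13.0
WAYMO_MILES_PER_INTERVENTION = 5600.0


def interventions_per_1000_miles(miles_per_intervention: float) -> float:
    """Expected number of safety-driver interventions over 1,000 miles."""
    return 1000.0 / miles_per_intervention


uber_rate = interventions_per_1000_miles(UBER_MILES_PER_INTERVENTION)
waymo_rate = interventions_per_1000_miles(WAYMO_MILES_PER_INTERVENTION)
ratio = WAYMO_MILES_PER_INTERVENTION / UBER_MILES_PER_INTERVENTION

print(f"Uber:  {uber_rate:.1f} interventions per 1,000 miles")
print(f"Waymo: {waymo_rate:.2f} interventions per 1,000 miles")
print(f"Waymo travels about {ratio:.0f} times farther per intervention")
```

On these figures, a Waymo vehicle would travel roughly 430 times farther between interventions than an Uber vehicle meeting its Arizona target.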

Compared with a controlled environment, self-driving cars have a harder time navigating streets in busy city centers, where a multitude of pedestrians, human-driven cars, light rails, cyclists and other actors complicate their decisions, not to mention the weather.

Ironically, one of these struggles is with picking up and dropping off passengers. Companies such as Uber, Waymo and General Motors’ Cruise Automation target autonomous ride-hailing fleets, or robotaxis, as the first vehicles to use self-driving car technology. But these cars could face challenges replicating the customer experience. For instance, Uber and Lyft customers in out-of-the-way locations can call drivers and direct them verbally, often requesting the driver find them in a crowd of people or down side roads.

Another problem for automated vehicles is geography. To safely navigate hills, cars need to be able to sense vertically as well as they do laterally, a task current sensors struggle to tackle. The sensors’ placement, such as behind the windshield, within the fascia or on the side, can limit whether they’re able to accurately detect objects when a car is going uphill or downhill.

The pressure to be first will force some companies, the irresponsible ones, to do sloppy things. It is unclear how many things went wrong in the Uber that struck and killed a pedestrian. But it wasn’t just one thing.

Tech companies use this kind of approach all the time: They introduce software before it is 100 percent ready and let the public find errors and flaws in the system. And then they upgrade as they need to. That’s fine when you’re talking about a phone or a website. But when you’ve got a heavy vehicle speeding along with no one paying attention behind the wheel, it’s too dangerous to put those cars on the road.